python - 為什么用requests庫(kù)能爬取而用scrapy卻不能?

問(wèn)題描述

# -*- coding: utf-8 -*-import requestsdef xici_request(): url = ’http://www.xicidaili.com’ headers = {’Accept’: ’text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8’,’Accept-Encoding’: ’gzip, deflate, sdch’,’Accept-Language’: ’zh-CN,zh;q=0.8’,’Cache-Control’: ’max-age=0’,’Connection’: ’keep-alive’,’Host’: ’www.xicidaili.com’,’Referer’: ’https://www.google.com/’,’User-Agent’: ’Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/56.0.2924.87 Safari/537.36’ res = requests.get(url, headers=headers) print(res.text)if __name__ == ’__main__’: xici_request()

# -*- coding: utf-8 -*-import scrapyfrom collectips.items import CollectipsItemclass XiciSpider(scrapy.Spider): name = 'xici' allowed_domains = ['http://www.xicidaili.com'] headers = {’Accept’: ’text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8’, ’Accept-Encoding’: ’gzip, deflate, sdch’, ’Accept-Language’: ’zh-CN,zh;q=0.8’, ’Cache-Control’: ’max-age=0’, ’Connection’: ’keep-alive’, ’Host’: ’www.xicidaili.com’, ’Referer’: ’https://www.google.com/’, ’User-Agent’: ’Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/56.0.2924.87 Safari/537.36’} def start_requests(self):reqs = []for i in range(1, 21): req = scrapy.Request(’http://www.xicidaili.com/nn/{}’.format(i), headers=self.headers) reqs.append(req)return reqs def parse(self, response):item = CollectipsItem()sel = response.selectorfor i in range(2, 102): item[’IP’] = sel.xpath(’//*[@id='ip_list']/tbody/tr[{}]/td[2]/text()’.format(i)).extract() item[’PORT’] = sel.xpath(’//*[@id='ip_list']/tbody/tr[{}]/td[3]/text()’.format(i)).extract() item[’DNS_POSITION’] = sel.xpath(’//*[@id='ip_list']/tbody/tr[{}]/td[4]/a/text()’.format(i)).extract() item[’TYPE’] = sel.xpath(’//*[@id='ip_list']/tbody/tr[{}]/td[6]/text()’.format(i)).extract() item[’SPEED’] = sel.xpath(’//*[@id='ip_list']/tbody/tr[{}]/td[7]/p[@title]’.format(i)).extract() item[’LAST_CHECK_TIME’] = sel.xpath(’//*[@id='ip_list']/tbody/tr[{}]/td[10]/text()’.format(i)).extract() yield item

代碼如上,為什么requests能返回網(wǎng)頁(yè)內(nèi)容,而scrapy卻是報(bào)錯(cuò)內(nèi)部服務(wù)器錯(cuò)誤500? 請(qǐng)大神解救??

問(wèn)題解答

回答1:并發(fā)你沒(méi)考慮進(jìn)去吧,當(dāng)同一時(shí)間發(fā)起過(guò)多的請(qǐng)求會(huì)直接封你IP

相關(guān)文章:

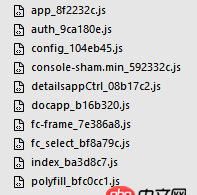

1. javascript - fis3使用MD5但是如何引用?2. 網(wǎng)頁(yè)爬蟲(chóng) - python爬蟲(chóng)翻頁(yè)問(wèn)題,請(qǐng)問(wèn)各位大神我這段代碼怎樣翻頁(yè),還有價(jià)格要登陸后才能看到,應(yīng)該怎么解決3. javascript - JAVA寫(xiě)的H5頁(yè)面能否解釋一下流程4. css - 怎么實(shí)現(xiàn)一個(gè)圓點(diǎn)在一個(gè)范圍內(nèi)亂飛5. python 計(jì)算兩個(gè)時(shí)間相差的分鐘數(shù),超過(guò)一天時(shí)計(jì)算不對(duì)6. javascript - 使用form進(jìn)行頁(yè)面跳轉(zhuǎn),但是很慢,如何加一個(gè)Loading?7. docker-machine添加一個(gè)已有的docker主機(jī)問(wèn)題8. docker-compose中volumes的問(wèn)題9. angular.js - 輸入郵箱地址之后, 如何使其自動(dòng)在末尾添加分號(hào)?10. javascript - 后臺(tái)管理系統(tǒng)左側(cè)折疊導(dǎo)航欄數(shù)據(jù)較多,怎么樣直接通過(guò)搜索去定位到具體某一個(gè)菜單項(xiàng)位置,并展開(kāi)當(dāng)前菜單

網(wǎng)公網(wǎng)安備

網(wǎng)公網(wǎng)安備